This article presents a recipe for test-driving microservices: an icy treat that can help us write tests that keep us shipping.

Testing recipes are fun, but I'm not here to mock the importance of test automation. Confidence in a change and a swift path to production are totally essential for high-performing teams. Ask DORA!

Test automation is essential

Most readers here know that good tests are hard to write, and even harder to keep.

We need feedback to verify the quality of a change. Good tests are a massive boost to productivity. Get them wrong and you live in a world of fragility, held hostage by your tests.

Before we look into how I avoid some of these problems, let’s review popular practice.

What are the popular test recipes?

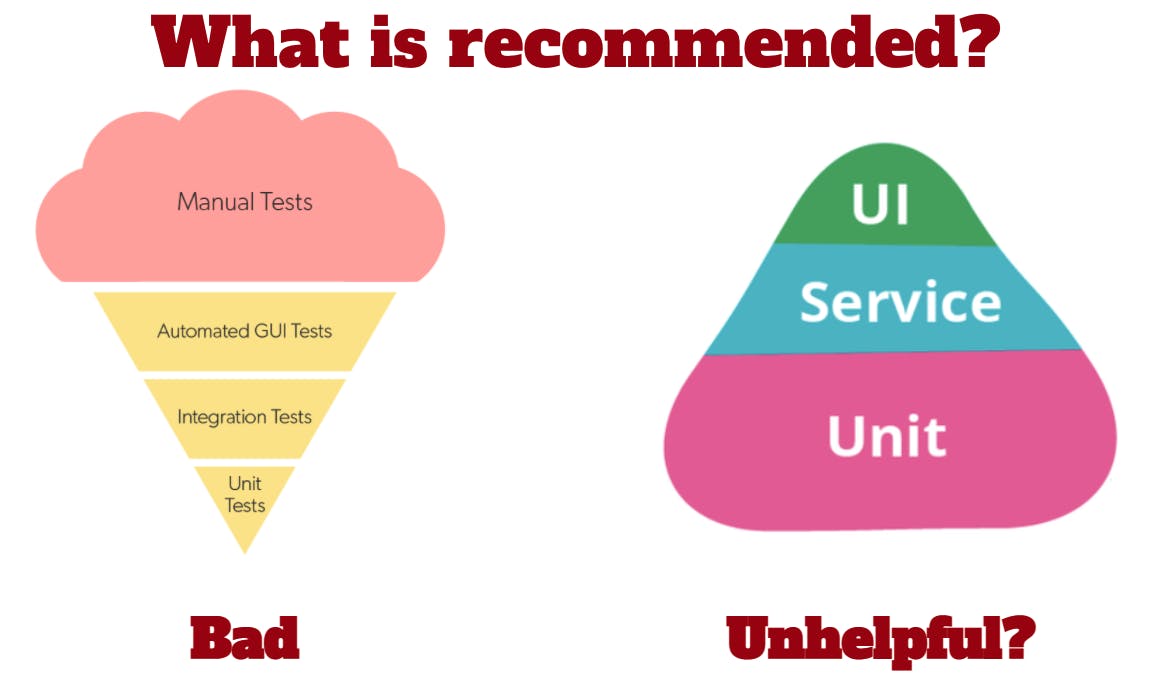

You may well have seen and used the advice above; both images are rightly popular.

The first, the Software Testing Ice-Cream Cone, is an anti-pattern. It’s super advice on what to avoid if you want to keep your software delivery sustainable and high quality.

The second, the famous testing pyramid, has been popular and really useful for years. However, I don’t think it always works in the microservice domain. It can lead our code into unhelpful places: tests that are coupled to design and hard to change.

So what should we do?

Ice cream headache?

Let's step back, check our heads and ask: What is a unit test anyway?

Look around the web and you will find many definitions that say something like:

"Smallest piece of testable software, tested in isolation to determine if its behaviour is as you would expect"

Small is important

Small narrows our focus, giving us fast, precise feedback. It can push our design towards lower coupling and draw our attention to the behaviour that matters.

How small? Good question.

Doctrine often becomes dogma, and we forget that ‘small’ is just one of the constraints on our test code.

Tests should make change safe. Too big, and a test won’t fit in your head or help you focus; too small, and the test can't help being coupled to the implementation, so we lose that safety.

Behaviour is a critical word in testing. If we test units of behaviour, even when that behaviour is provided by a set of collaborating classes, we get tests that better support change.
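To make that concrete, here is a minimal TypeScript sketch. `Basket` and `DiscountPolicy` are hypothetical classes and the discount rule is invented: two collaborating classes provide one behaviour (a basket total), and the checks exercise them together through `Basket`'s public methods, with no mock standing in for `DiscountPolicy`.

```typescript
// Hypothetical collaborating classes that together provide one behaviour:
// the total price of a basket.
class DiscountPolicy {
  // Invented rule for illustration: 10% off subtotals of 100 or more
  // (the discount is rounded down to a whole unit).
  apply(subtotal: number): number {
    return subtotal >= 100 ? subtotal - Math.floor(subtotal / 10) : subtotal;
  }
}

interface LineItem {
  name: string;
  price: number; // whole currency units, to keep the arithmetic exact
  qty: number;
}

class Basket {
  private readonly items: LineItem[] = [];
  private readonly policy: DiscountPolicy;

  constructor(policy: DiscountPolicy = new DiscountPolicy()) {
    this.policy = policy;
  }

  add(name: string, price: number, qty = 1): void {
    this.items.push({ name, price, qty });
  }

  total(): number {
    const subtotal = this.items.reduce((sum, item) => sum + item.price * item.qty, 0);
    return this.policy.apply(subtotal);
  }
}

// Behaviour-level checks: real collaborators, assertions only on Basket's
// public interface. Nothing here refers to DiscountPolicy.
function checkBasketTotals(): void {
  const small = new Basket();
  small.add("pen", 5, 2);
  if (small.total() !== 10) throw new Error("no discount expected below 100");

  const large = new Basket();
  large.add("chair", 50, 2);
  if (large.total() !== 90) throw new Error("10% discount expected at 100");
}

checkBasketTotals();
```

Because the checks only use `add` and `total`, folding the discount logic into `Basket`, or splitting it further, would not break them.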

No matter the size of the unit, we want our tests and TDD to drive out a good design with independent, decoupled parts.

So, what makes a great unit under test?

Code that:

- Does one job

- Is independent

- Is loosely coupled

- Offers a clear interface we can test

And...

- Fits in your head (credit: Daniel Terhorst-North)

Sounds like a definition of a microservice

If we treat our microservice as the unit under test, what happens?

- We'll know that the microservice keeps doing what we need it to

- We'll have documentation of what the service does, to help us hold it in our heads

- We can test its independence from other services and observe the interaction

- We can design as we test and as we code: test-driven microservices

A recipe for success

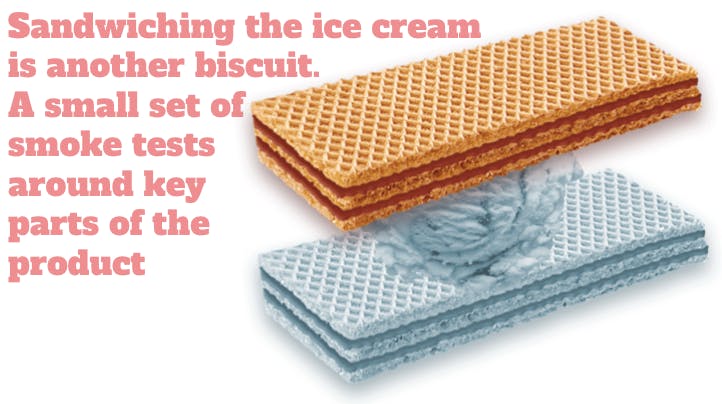

Alright, I’ve waffled for long enough. Here’s the recipe for how I test my microservices.

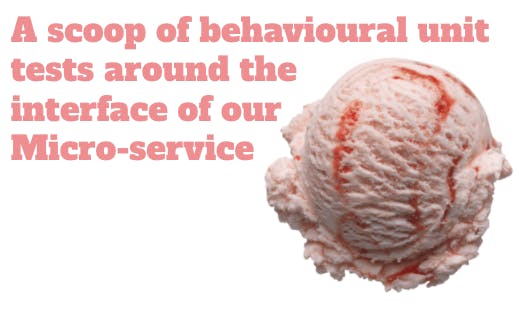

Step 1: Start with the key ingredient that holds everything together

The majority of my tests exercise the service as a whole, setting expectations against the service’s interface.

This means I’m testing the code, modules and libraries together through the same API that consumers will use: usually HTTP, plus the async events the service receives and dispatches.
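Events can be driven the same way. Below is a minimal sketch, assuming an in-memory bus stands in for the real broker; `InMemoryBus`, `startOrderService` and the `OrderPlaced`/`PaymentRequested` events are hypothetical. The test publishes the event the service receives and asserts on the event it dispatches, never on its internals.

```typescript
// Hypothetical event shape; a real service would use its broker's schemas.
interface BusEvent {
  type: string;
  payload: Record<string, unknown>;
}

type Handler = (event: BusEvent) => void;

// In-memory stand-in for a real broker. It records everything published so
// tests can assert on dispatched events.
class InMemoryBus {
  private readonly handlers = new Map<string, Handler[]>();
  readonly published: BusEvent[] = [];

  subscribe(type: string, handler: Handler): void {
    const list = this.handlers.get(type) ?? [];
    list.push(handler);
    this.handlers.set(type, list);
  }

  publish(event: BusEvent): void {
    this.published.push(event);
    for (const handler of this.handlers.get(event.type) ?? []) handler(event);
  }
}

// Hypothetical service: when an order is placed, request payment for it.
function startOrderService(bus: InMemoryBus): void {
  bus.subscribe("OrderPlaced", (event) => {
    bus.publish({
      type: "PaymentRequested",
      payload: { orderId: event.payload.orderId, amount: Number(event.payload.total) },
    });
  });
}

// Service-level test: given a received event, expect a dispatched event.
const bus = new InMemoryBus();
startOrderService(bus);
bus.publish({ type: "OrderPlaced", payload: { orderId: "o-1", total: 42 } });

const dispatched = bus.published.filter((e) => e.type === "PaymentRequested");
if (dispatched.length !== 1 || dispatched[0].payload.orderId !== "o-1") {
  throw new Error("expected one PaymentRequested event for o-1");
}
```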

I isolate the service from its collaborators using an HTTP mocking tool such as Nock or Hoverfly.
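Here is a minimal sketch of one such test, using only Node.js built-ins (Node 18 or later, for the global `fetch`). To keep it self-contained, a hand-rolled fake server plays the part that Nock or Hoverfly would; the quote service, its `/quote/:sku` route, the pricing collaborator and the 20% tax rule are all hypothetical.

```typescript
import * as http from "node:http";
import type { AddressInfo } from "node:net";

// Listen on an ephemeral port and resolve with the server's base URL.
function listen(server: http.Server): Promise<string> {
  return new Promise((resolve) => {
    server.listen(0, "127.0.0.1", () => {
      const { port } = server.address() as AddressInfo;
      resolve(`http://127.0.0.1:${port}`);
    });
  });
}

// Hypothetical service under test: GET /quote/:sku asks a pricing collaborator
// for a price and adds 20% tax. The collaborator's URL is injected as config.
function createQuoteService(pricingUrl: string): http.Server {
  return http.createServer(async (req, res) => {
    const match = req.url?.match(/^\/quote\/([\w-]+)$/);
    if (req.method !== "GET" || !match) {
      res.writeHead(404).end();
      return;
    }
    try {
      const upstream = await fetch(`${pricingUrl}/prices/${match[1]}`);
      if (!upstream.ok) {
        // Pass "unknown SKU" through; treat any other upstream failure as 502.
        res.writeHead(upstream.status === 404 ? 404 : 502).end();
        return;
      }
      const { price } = (await upstream.json()) as { price: number };
      res.writeHead(200, { "content-type": "application/json" });
      res.end(JSON.stringify({ sku: match[1], total: Math.round((price * 6) / 5) }));
    } catch {
      res.writeHead(502).end(); // collaborator unreachable
    }
  });
}

// Fake collaborator: a hand-rolled stand-in for the stubbing Nock or Hoverfly
// would do. It serves canned prices and 404s for anything else.
function createFakePricing(prices: Record<string, number>): http.Server {
  return http.createServer((req, res) => {
    const sku = (req.url ?? "").replace(/^\/prices\//, "");
    if (Object.prototype.hasOwnProperty.call(prices, sku)) {
      res.writeHead(200, { "content-type": "application/json" });
      res.end(JSON.stringify({ price: prices[sku] }));
    } else {
      res.writeHead(404).end();
    }
  });
}

// Service-level test: drive the real service over HTTP, as a consumer would.
async function quoteServiceTest() {
  const pricing = createFakePricing({ "sku-1": 100 });
  const service = createQuoteService(await listen(pricing));
  const base = await listen(service);
  try {
    const found = await fetch(`${base}/quote/sku-1`);
    const body = (await found.json()) as { sku: string; total: number };
    const missing = await fetch(`${base}/quote/sku-404`);
    return { foundStatus: found.status, body, missingStatus: missing.status };
  } finally {
    // Stop accepting connections and drop keep-alive sockets so the process can exit.
    service.close();
    pricing.close();
    service.closeAllConnections();
    pricing.closeAllConnections();
  }
}
```

The test only speaks HTTP to the service, so its internals can be refactored freely. Swapping the fake server for a mocking tool would change the setup, not the expectations.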

By setting expectations on the service’s behaviours, I get feedback on the things I really care about. I can also refactor the implementation while keeping runnable tests that assert it still does its job. They’re totes handy as service documentation, too.

I often add some juicy morsels of UI component testing. Service-level tests don’t fully exercise any web-based UI or SPA the service might provide, and I like quick feedback there too. I write React component tests; some of my co-workers use Storybook.

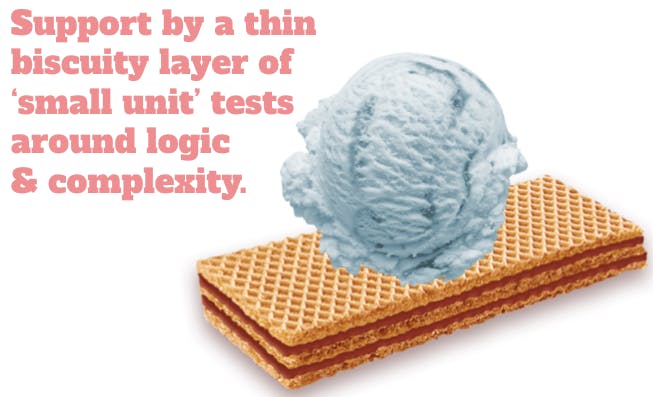

Step 2: Support complexity with focused testing

If your microservice does something complex (and most do), there will be places where service-level tests alone won’t exercise the code enough.

Where I have a lot of necessary and important complexity, I write unit tests around the single module or class that does the heavy lifting. I try very hard to avoid mocks or other test doubles for these.

This stops service-test bloat. If you find yourself writing many similar service-level tests, it’s a smell that some logic could be better covered by a set of smaller, simpler cases.
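A minimal sketch of that kind of focused test, assuming a hypothetical `shippingCost` module with invented pricing rules: one pure function, a table of cases, and no mocks or other test doubles. Each new edge case is one more row rather than one more service-level test.

```typescript
// Hypothetical module doing the heavy lifting: shipping cost in pence by
// weight and zone. The rules are invented for illustration.
type Zone = "domestic" | "europe" | "world";

const BASE_PENCE: Record<Zone, number> = { domestic: 300, europe: 800, world: 1500 };
const EXTRA_KG_PENCE: Record<Zone, number> = { domestic: 50, europe: 150, world: 400 };

function shippingCost(weightKg: number, zone: Zone): number {
  if (!(weightKg > 0)) throw new RangeError(`weight must be positive, got ${weightKg}`);
  // The base price covers the first kg; every started kg after that costs extra.
  const extraKg = Math.ceil(weightKg) - 1;
  return BASE_PENCE[zone] + extraKg * EXTRA_KG_PENCE[zone];
}

// Table-driven cases: many small, precise checks on one module, using the
// real code and no test doubles.
const cases: Array<[number, Zone, number]> = [
  [0.5, "domestic", 300],
  [1, "domestic", 300], // exactly one kg: base price only
  [1.01, "domestic", 350], // a started second kg is charged
  [3, "europe", 1100],
  [10, "world", 5100],
];

for (const [weight, zone, expected] of cases) {
  const actual = shippingCost(weight, zone);
  if (actual !== expected) {
    throw new Error(`shippingCost(${weight}, ${zone}) = ${actual}, expected ${expected}`);
  }
}
```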

Cover that complexity well. A future developer will thank you!

Step 3: Add a protective top layer

Testing collaborating services together gives you confidence that your product will work for your users. I aim a small set of smoke tests at the critical areas of my product that I really want confidence in before I ship.

I don’t go overboard with these; they are costly to maintain. I often choose areas that I can’t cover with simpler tests, and especially areas that are too important to risk breaking in production.
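A minimal sketch of an HTTP-level smoke suite, assuming Node 18 or later; the check names and paths (`/health`, `/checkout`) are hypothetical. It runs every check and collects the failures, so a deploy pipeline can stop before shipping.

```typescript
// One critical journey to verify against a deployed environment.
interface SmokeCheck {
  name: string;
  path: string;
  expectStatus: number;
}

// Hypothetical critical paths: keep this list short and focused.
const CHECKS: SmokeCheck[] = [
  { name: "health", path: "/health", expectStatus: 200 },
  { name: "checkout page", path: "/checkout", expectStatus: 200 },
];

// Run every check and collect failures instead of stopping at the first one.
async function runSmokeTests(baseUrl: string, checks: SmokeCheck[]): Promise<string[]> {
  const failures: string[] = [];
  for (const check of checks) {
    try {
      const res = await fetch(new URL(check.path, baseUrl), {
        signal: AbortSignal.timeout(5_000), // don't let a hung environment stall the pipeline
      });
      await res.text(); // drain the body so the connection is released
      if (res.status !== check.expectStatus) {
        failures.push(`${check.name}: expected ${check.expectStatus}, got ${res.status}`);
      }
    } catch (err) {
      failures.push(`${check.name}: ${err instanceof Error ? err.message : String(err)}`);
    }
  }
  return failures;
}

// Example wiring in a deploy pipeline (SMOKE_BASE_URL is a hypothetical variable):
// const failures = await runSmokeTests(process.env.SMOKE_BASE_URL ?? "", CHECKS);
// if (failures.length > 0) { console.error(failures.join("\n")); process.exit(1); }
```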

Step 4: Ship often and pair with Testing in Prod

I make sure my butt is properly covered by both intentional Testing in Production and products that degrade gracefully (recipes for another blog post).

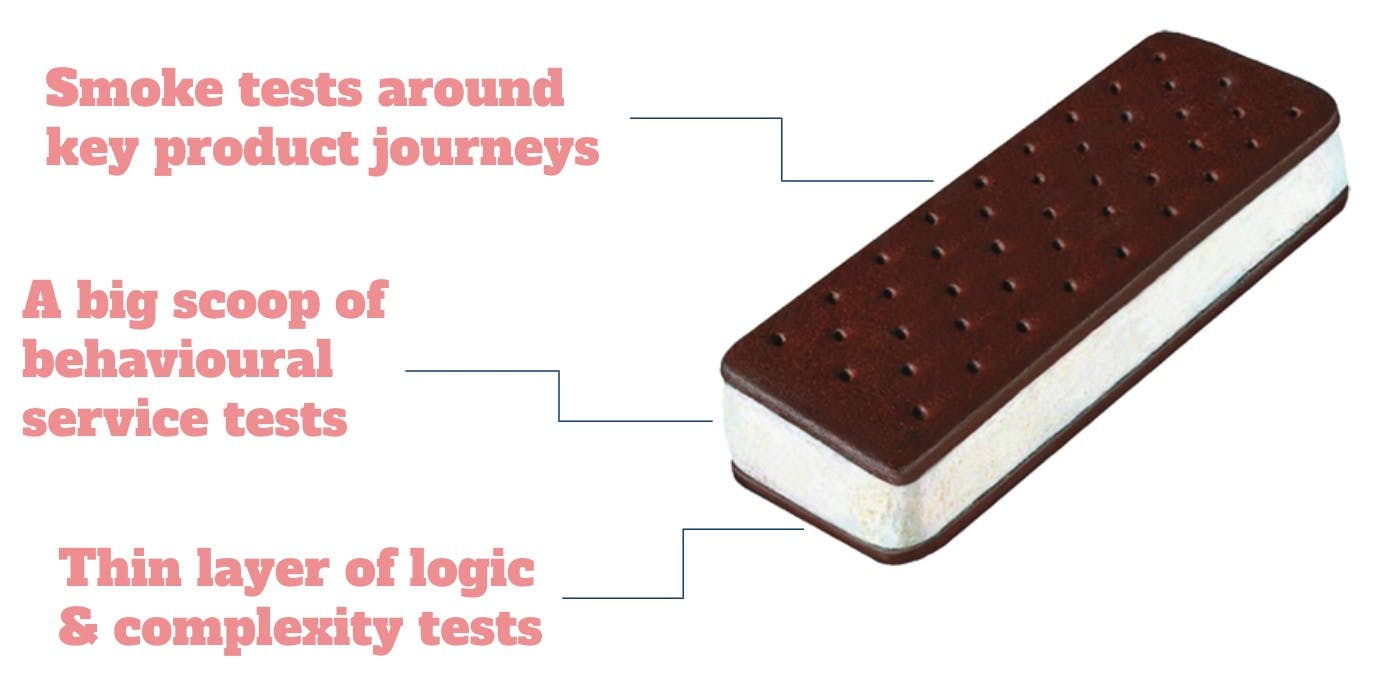

So when I’m testing a microservice, I think of an ice cream sandwich

I'm not the only one to think this. Kent C. Dodds has written on the subject. Brian Elgaard Bennett digs deep into how the Given in BDD drives our thinking about which behaviour to verify next. Folks at Spotify shape their tests like a honeycomb.

What shape are the tests around your microservices?